Single Model vs. Multi-Model: Betting Accuracy

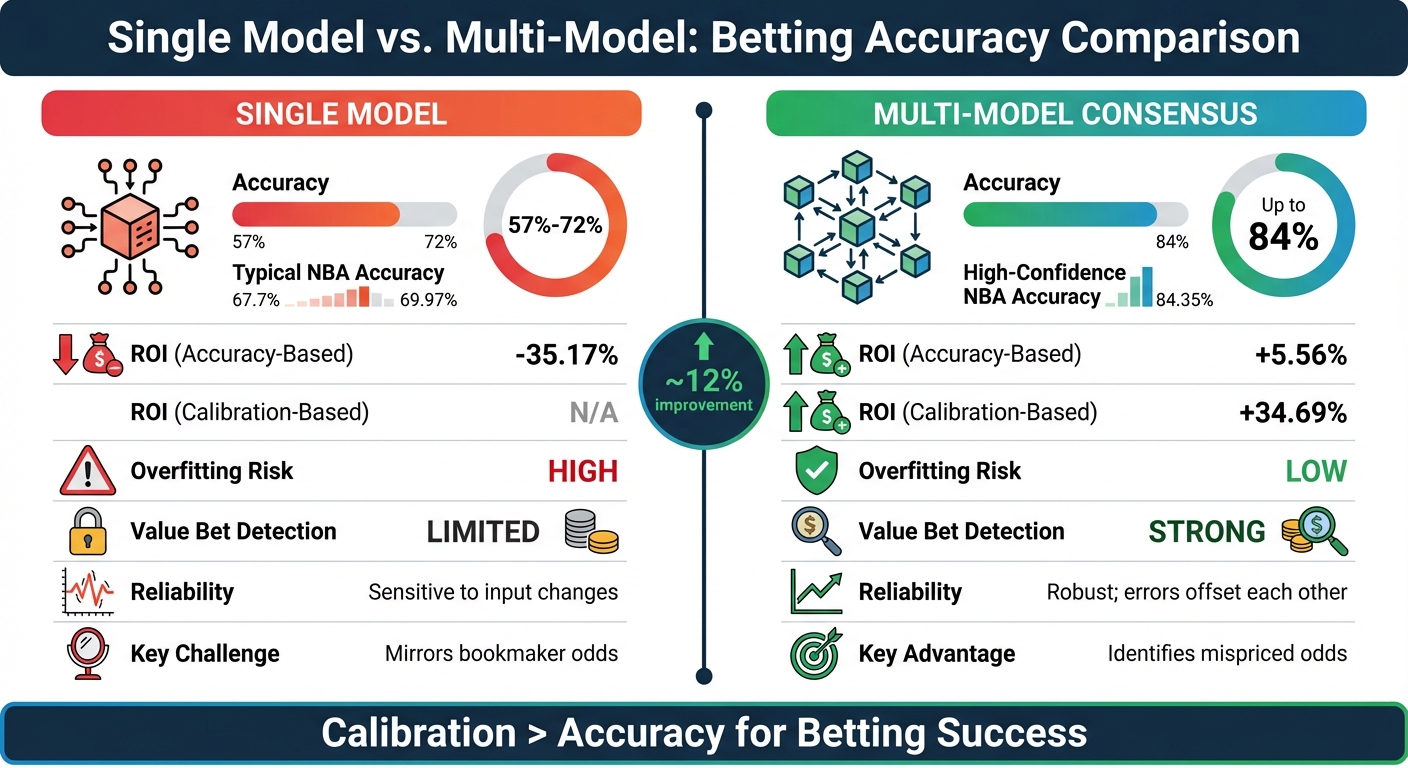

Which system is better for betting predictions? Multi-model systems outperform single models by combining multiple algorithms to reduce errors, improve calibration, and identify value bets. Single models are simpler and achieve accuracy rates of 57%-72%, while multi-model systems can reach up to 84% accuracy on high-confidence predictions and deliver higher ROI, especially when model selection focuses on calibration rather than raw accuracy.

Key Takeaways:

- Single Models: Easier to implement but prone to bias, overfitting, and inconsistent results. Accuracy often aligns too closely with bookmakers, limiting profitability.

- Multi-Model Systems: Use diverse algorithms to improve reliability, reduce errors, and uncover mispriced odds. Calibration-focused model selection delivered an average ROI of +34.69% in NBA betting experiments.

Quick Comparison:

| Metric | Single Model | Multi-Model Consensus |
|---|---|---|
| Accuracy | 57%-72% | Up to 84% |
| ROI (Accuracy-Based) | -35.17% | +5.56% |
| ROI (Calibration-Based) | N/A | +34.69% |
| Overfitting Risk | High | Low |
| Value Bet Detection | Limited | Strong |

If you're serious about improving betting outcomes, focus on systems that prioritize calibration and combine multiple models for better accuracy and reliability.

Single Model vs Multi-Model Betting Systems: Performance Comparison

Performance of Single-Model Approaches

Accuracy Metrics for Single Models

Single-model systems show varying levels of success depending on the algorithm and the sport they’re applied to. For example, in NBA betting, logistic regression, a widely used method, achieves an accuracy of about 69.97%. Other algorithms, like multi-layer perceptrons and support vector machines, perform similarly, with accuracies hovering around 68% and 67.7%, respectively.

Some advanced single-model systems can push accuracy as high as 84.35% for high-confidence NBA predictions. However, most automated AI-driven betting tools typically fall within the 50% to 60% accuracy range, which limits their reliability.

But accuracy isn’t the only metric that matters. A gradient boosting model built for English Premier League predictions achieved a Ranked Probability Score (RPS) of 0.2156 over the 2014–2015 and 2015–2016 seasons. Because lower RPS values are better, it still trailed bookmakers such as Bet365 and Pinnacle, which scored 0.2012 over the same period, despite its advanced feature engineering. These numbers show the practical limits single models face, even when they look strong on paper.
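RPS compares cumulative forecast probabilities with the cumulative observed outcome across ordered categories, so a forecast that put some weight on a draw is penalized less for a home win than one that favored an away win. Below is a minimal sketch of the standard RPS formula; the outcome order (home win, draw, away win) and the function names are assumptions for illustration.

```python
from itertools import accumulate

def ranked_probability_score(probs, outcome_index):
    """RPS for one match with ordered outcomes (e.g. home win, draw, away win).

    probs: forecast probabilities in outcome order, summing to 1.
    outcome_index: index of the outcome that actually happened.
    Lower is better; 0 means a perfect, fully confident forecast.
    """
    r = len(probs)
    observed = [1.0 if i == outcome_index else 0.0 for i in range(r)]
    cum_forecast = list(accumulate(probs))
    cum_observed = list(accumulate(observed))
    # Squared gaps between the cumulative distributions over the first r - 1 categories.
    total = sum((f - o) ** 2 for f, o in zip(cum_forecast[:-1], cum_observed[:-1]))
    return total / (r - 1)

def mean_rps(forecasts, outcomes):
    """Average RPS over a set of matches, as used to compare models over a season."""
    scores = [ranked_probability_score(p, o) for p, o in zip(forecasts, outcomes)]
    return sum(scores) / len(scores)
```

A 50/30/20 home/draw/away forecast scores 0.145 if the home side wins and 0.445 if the away side wins.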

Challenges of Single-Model Predictions

Single-model systems face several challenges that limit their effectiveness in betting. The biggest is overfitting. Complex models, such as artificial neural networks, can memorize historical patterns so closely that they perform poorly in new, unpredictable situations like player injuries or lineup changes. A model might excel on past data but crumble when real-world variables shift.
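A common way to catch overfitting before it costs money is walk-forward evaluation: train only on games played before a test window, score that window, then roll forward. The generator below is a minimal sketch; it assumes games are already sorted by date, and the function name and parameters are illustrative.

```python
def walk_forward_splits(n_games, initial_train, test_size):
    """Yield (train_indices, test_indices) pairs in chronological order.

    Each fold trains only on games played before its test window, which
    mimics live betting. Assumes index 0 is the earliest game.
    """
    if initial_train < 1 or test_size < 1:
        raise ValueError("initial_train and test_size must be at least 1")
    start = initial_train
    while start < n_games:
        end = min(start + test_size, n_games)
        yield list(range(start)), list(range(start, end))
        start = end
```

A model whose in-sample accuracy sits far above its walk-forward accuracy is likely memorizing history rather than learning patterns that transfer to new games.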

Single models are also sensitive to incomplete or noisy data. Sports data is often sparse, changes quickly, and lacks context on factors like rivalry dynamics or unexpected team adjustments. Without diverse inputs, a single model's output can be inconsistent from game to game. Manual analysis built around a single model is also time-consuming, taking anywhere from 30 minutes to several hours per game, and it leaves room for human error in data handling.

Calibration is another weak spot. Even models with decent strike rates can struggle if their predicted probabilities don’t align well with actual outcomes. These limitations make it clear why relying solely on single models can be risky and why a more diversified approach might yield better results.
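One way to check calibration is a reliability table: group predictions into probability bins and compare the average predicted probability in each bin with how often the team actually won. The count-weighted average gap is known as the expected calibration error (ECE). This sketch assumes outcomes coded as 1 for a win and 0 for a loss; ten equal-width bins is a common but arbitrary choice.

```python
def reliability_table(probs, outcomes, n_bins=10):
    """Return (mean_predicted, observed_win_rate, count) for each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the top bin
        bins[idx].append((p, y))
    table = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            win_rate = sum(y for _, y in members) / len(members)
            table.append((mean_p, win_rate, len(members)))
    return table

def expected_calibration_error(probs, outcomes, n_bins=10):
    """Count-weighted average gap between predicted and observed win rates."""
    n = len(probs)
    return sum(count / n * abs(mean_p - win_rate)
               for mean_p, win_rate, count in reliability_table(probs, outcomes, n_bins))
```

A model that says 70% on ten games but wins only five of them has an ECE of 0.2 on that sample, even if its picks look reasonable.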

Advantages of Multi-Model Consensus in Betting

How Multi-Model Systems Work

Multi-model systems combine predictions from various models to produce more reliable forecasts. They use techniques like majority voting, weighted averaging, stacking, and boosting. Here's how they work:

- Majority Voting: The final prediction is based on the choice most models agree upon.

- Weighted Averaging: Models with stronger past performance are given more influence in the final prediction.

- Stacking (or Meta-Learning): A meta-model is trained to determine the best way to blend predictions from base models.

- Boosting: New models are built sequentially, each focusing on correcting errors from the previous ones.
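The first two techniques can be sketched in a few lines. The function names are illustrative, and how the weights are set (for example, from each model's past log loss) is an assumption left open here. Stacking and boosting need a training loop and are not shown in this block.

```python
from collections import Counter

def majority_vote(picks):
    """Return the pick most models agree on; ties go to the pick seen first."""
    return Counter(picks).most_common(1)[0][0]

def weighted_average(probs, weights):
    """Blend win probabilities, giving higher-weighted models more influence."""
    total = sum(weights)
    return sum(p * w for p, w in zip(probs, weights)) / total
```

For example, three models picking "home", "away", "home" produce a "home" consensus, and blending probabilities of 0.6, 0.5, and 0.7 with weights 2, 1, and 1 gives 0.6.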

The strength of multi-model systems lies in their diversity. By combining models that rely on different features and decision-making processes, these systems reduce the impact of any single model's shortcomings. This diversity is what produces more balanced and consistent performance.

Improved Accuracy and Reduced Errors

Studies reveal that blending forecasts can cut errors by about 12% compared to relying on a single model. For example, in NBA betting, individual models typically reach accuracies between 67.7% and 69.97%. However, high-confidence ensemble systems can achieve accuracies as high as 84.35%. This improvement translates into more dependable strategies and better identification of value bets for sports bettors.

Interestingly, research highlights that calibration often matters more than sheer accuracy in sports betting:

"For the sports betting problem, model calibration is more important than accuracy." - Conor Walsh, Department of Computer Science, University of Bath

Multi-model systems tend to produce better-calibrated probabilities because combining models does more than reduce noise. Simple averaging mainly cancels out noise, while more sophisticated methods, such as Bayesian aggregators and stacking, tackle noise and bias at the same time, leading to better signal extraction.
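Stacking can be sketched as a logistic-regression meta-model that learns how much to trust each base model's log-odds. This is one simple choice of meta-model, not the only one, and the function names are hypothetical. To avoid rewarding base models that overfit, the meta-model should be trained on predictions the base models made for games they were not trained on, such as a later, held-out stretch of the season.

```python
import math

def logit(p):
    """Convert a probability strictly between 0 and 1 to log-odds."""
    return math.log(p / (1 - p))

def sigmoid(z):
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-z))

def fit_stacker(base_probs, outcomes, lr=0.1, epochs=2000):
    """Fit a logistic-regression meta-model on base-model log-odds.

    base_probs: one row per game, each row holding every base model's win
    probability for that game (values strictly between 0 and 1).
    outcomes: 1 if the team won, 0 otherwise.
    Returns (weights, bias) found by batch gradient descent on log loss.
    """
    rows = [[logit(p) for p in row] for row in base_probs]
    n, k = len(rows), len(rows[0])
    weights, bias = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for x, y in zip(rows, outcomes):
            # Error of the current meta-model's probability on this game.
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i in range(k):
                grad_w[i] += err * x[i] / n
            grad_b += err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias

def stacked_prob(weights, bias, probs):
    """Blend one game's base-model probabilities with the fitted meta-model."""
    return sigmoid(sum(w * logit(p) for w, p in zip(weights, probs)) + bias)
```

A base model whose probabilities track results ends up with a large weight, while a model that adds little signal gets a weight near zero or even a negative one.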

Comparison Table: Single vs. Multi-Model Performance

| Metric | Single Model | Multi-Model Consensus |
|---|---|---|
| Typical NBA Accuracy | 67.7%–69.97% | Up to 84.35% (high-confidence) |
| Error Reduction | Baseline | ~12% improvement |
| Average ROI (Accuracy-Based) | -35.17% | +5.56% |
| Average ROI (Calibration-Based) | N/A | +34.69% |
| Overfitting Risk | High | Low (diversity reduces it) |
| Reliability | Sensitive to input changes | Robust; errors offset each other |
| Value Bet Detection | Poor (mirrors bookmaker) | Strong (identifies mispricing) |

The real strength of multi-model systems is not that they always beat the best individual model, but that they reduce the risk of relying on the wrong one. As Michèle Hibon, Senior Research Fellow at INSEAD, puts it:

"The advantage of combinations is not that the best are better than the best individual forecasts... but that selecting among combinations is less risky than selecting among individual forecasts."

Key Factors Driving Multi-Model Success

Model Diversity and Feature Engineering

Multi-model systems thrive by blending algorithms that make different types of errors. As IBM Research explains:

The greater diversity among combined models, the more accurate the resulting ensemble model.

This concept ties back to the bias-variance tradeoff. A single model might lean too heavily toward bias or variance, but combining models balances these tendencies, preserving their strengths while minimizing overall error.
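The variance side of this tradeoff can be made concrete with a standard identity. Assume, as a simplification, that each of $M$ models has a prediction error $\varepsilon_m$ with the same variance $\sigma^2$ and the same pairwise correlation $\rho$. The error of their simple average then has variance:

$$
\operatorname{Var}\!\left(\frac{1}{M}\sum_{m=1}^{M}\varepsilon_m\right)
= \frac{M\sigma^2 + M(M-1)\rho\sigma^2}{M^2}
= \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
$$

When $\rho = 1$ (models that all make the same mistakes), averaging gives no variance reduction; when $\rho = 0$, the variance falls to $\sigma^2/M$. Averaging does not remove a bias that all models share, which is why diverse features and algorithms matter.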

Feature engineering plays a crucial role in boosting performance. Metrics like Elo ratings, Expected Goals (xG), and Player Efficiency, along with variables such as rivalry intensity (measured using conditional logit regression), offer unique perspectives. Each model interprets these signals differently, adding depth to predictions.
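Elo ratings are a good example of an engineered feature. The sketch below uses the standard Elo expected-score and update formulas; the K-factor of 20 and the lack of a home-advantage adjustment are simplifying assumptions, not tuned values.

```python
def elo_expected(rating_a, rating_b):
    """Expected score for team A under the standard Elo formula (0 to 1)."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))

def elo_update(rating_a, rating_b, score_a, k=20):
    """Return updated (rating_a, rating_b) after one game.

    score_a: 1 for an A win, 0.5 for a draw, 0 for an A loss.
    k: update step size; 20 is an illustrative choice.
    """
    delta = k * (score_a - elo_expected(rating_a, rating_b))
    return rating_a + delta, rating_b - delta
```

Two 1500-rated teams each start at an expected score of 0.5, and a win moves the ratings to 1510 and 1490. The expected score can be fed to other models as a feature or used on its own as one simple base model in an ensemble.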

By combining diverse inputs, consensus techniques further sharpen prediction accuracy.

Consensus-Based Outlier Detection

Consensus methods, such as trimmed means or medians, help manage outlier predictions by reducing the weight of extreme values. This approach minimizes the influence of any one model’s errors.
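Here is a minimal sketch of median and trimmed-mean consensus over several models' win probabilities. The function names are illustrative and the 20% trim fraction is an arbitrary choice.

```python
import statistics

def trimmed_mean(values, trim_fraction=0.2):
    """Average after dropping the same share of lowest and highest predictions."""
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    ordered = sorted(values)
    k = int(len(ordered) * trim_fraction)  # predictions dropped from each end
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)

def consensus(values):
    """Outlier-resistant summaries, alongside the plain mean for comparison."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "trimmed_mean": trimmed_mean(values),
    }
```

With five models predicting 0.52, 0.55, 0.57, 0.58, and 0.91, the single outlier pulls the plain mean up to about 0.63, while the median (0.57) and the trimmed mean (about 0.57) stay near the cluster.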

The result is better-calibrated predictions, which are essential for aligning probabilities with real-world outcomes. This precision is especially valuable for bankroll strategies like the Kelly Criterion, which sizes each stake directly from the predicted win probability. Simple averaging reduces noise, while stacking goes a step further by also cutting bias and extracting clearer signals.
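Because the Kelly Criterion turns a probability directly into a stake, any calibration error flows straight into bet sizing. Below is a minimal sketch of the standard Kelly formula for decimal odds, with an optional multiplier for fractional Kelly; the function name and defaults are illustrative.

```python
def kelly_fraction(p_win, decimal_odds, fraction=1.0):
    """Share of bankroll to stake under the Kelly Criterion.

    p_win: the model's (ideally well-calibrated) win probability.
    decimal_odds: bookmaker price in decimal format, e.g. 2.10.
    fraction: scale-down factor for fractional Kelly (0.5 = half Kelly).
    Returns 0.0 when the bet has no positive edge.
    """
    if decimal_odds <= 1:
        raise ValueError("decimal odds must be greater than 1")
    b = decimal_odds - 1  # net profit per unit staked on a win
    full_kelly = (b * p_win - (1 - p_win)) / b
    return max(0.0, full_kelly) * fraction
```

At even money (decimal odds 2.0), a model that says 55% stakes 10% of bankroll at full Kelly, or 5% at half Kelly. If the true probability is really 50%, that stake has no edge at all, which is why overconfident probabilities are costly.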

These methods are vital for platforms aiming to provide trustworthy and accurate betting insights.

How WagerProof Uses Statistical Models

WagerProof serves as a prime example of multi-model success. The platform integrates a variety of statistical sub-models, from simulations to real-time news analysis, to produce well-calibrated win probabilities. Its Edge Finder tool identifies value bets by comparing prediction market spreads and surfacing outliers.

Unlike opaque "black-box" pick services, WagerProof prioritizes transparent, data-backed recommendations. It combines professional betting data - such as prediction markets, historical statistics, public money trends, and statistical models - into a single, streamlined interface. WagerBot Chat taps into live data sources to deliver insights without fabricating information, while Real Human Editors ensure high-value opportunities are validated with detailed explanations of the math behind them. This mix of techniques showcases how multi-model systems can significantly enhance betting accuracy.

Practical Implications for Bettors

Single Models: Hitting a Ceiling

When using single models, accuracy for predicting NBA outcomes tends to max out at around 69.97%. The catch is that accuracy alone doesn't guarantee profit. Even with a 70% win rate, poorly calibrated probability estimates can lead to losses: in NBA betting experiments, models selected purely for accuracy produced an average ROI of -35.17%.

Conor Walsh and Alok Joshi summed it up well:

A highly accurate predictive model is useless as long as it coincides with the bookmaker's model

In simpler terms, if your model mirrors how sportsbooks calculate odds, you're not finding any real edge - you’re just playing along with market prices. This is why bettors relying solely on single models often struggle to grow their bankrolls.
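A quick expected-value calculation shows why matching the bookmaker is not enough. The sketch assumes decimal odds and a 1-unit stake; the 1.91 price used in the example is an illustrative assumption.

```python
def expected_value(p_win, decimal_odds, stake=1.0):
    """Expected profit of a bet, given a win probability and decimal odds."""
    profit_if_win = (decimal_odds - 1) * stake
    return p_win * profit_if_win - (1 - p_win) * stake
```

If a bookmaker prices both sides of a coin-flip game at 1.91 and your model also says 50%, each 1-unit bet loses about 0.045 units on average. The bookmaker's margin guarantees a negative expectation unless your probability differs from the market's in the right direction; at 55%, the same price yields a positive expected value of about 0.05 units.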

On the other hand, multi-model systems take a different approach, addressing calibration issues and uncovering opportunities that single models miss.

Multi-Model Systems: Unlocking Market Inefficiencies

Multi-model systems outperform single models by addressing their core weakness - calibration mismatches. By combining multiple algorithms, these systems can identify market inefficiencies that would otherwise go unnoticed. For example, calibration-based model selection in experiments delivered an average ROI of +34.69%, with the best-performing setups reaching +36.93% ROI.
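The difference between accuracy-based and calibration-based model selection comes down to the scoring rule used on validation games. The sketch below uses the Brier score as the calibration criterion; that choice is an assumption, since the experiments cited may have used a different calibration metric, and the function names are illustrative.

```python
def accuracy(probs, outcomes):
    """Share of games where the favored side (p >= 0.5) matched the result."""
    return sum((p >= 0.5) == bool(y) for p, y in zip(probs, outcomes)) / len(outcomes)

def brier_score(probs, outcomes):
    """Mean squared gap between predicted probability and result (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(outcomes)

def select_model(candidates, outcomes, by="calibration"):
    """Pick the candidate whose validation predictions score best.

    candidates: dict mapping model name -> list of win probabilities.
    by="accuracy" maximizes hit rate; by="calibration" minimizes Brier score.
    """
    if by == "accuracy":
        return max(candidates, key=lambda name: accuracy(candidates[name], outcomes))
    return min(candidates, key=lambda name: brier_score(candidates[name], outcomes))
```

An overconfident model can win on accuracy while a more modest model wins on calibration. Only the second kind of probability is safe to compare against market prices.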

Take WagerProof as an example. Its Edge Finder tool uses a mix of statistical models, prediction markets, and public betting data to highlight outliers and value bets. When the spreads predicted by the market don't align with statistical models, the system flags these discrepancies as potential opportunities. WagerBot Chat adds another layer by tapping into live professional data sources to analyze betting options in real time, while Real Human Editors review and validate high-value picks, explaining the math behind them.
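The general idea of flagging disagreements between a model and the market can be sketched in a few lines. This is a hypothetical illustration, not WagerProof's Edge Finder: it works with two-way moneyline prices rather than spreads, removes the bookmaker margin by simple normalization, and uses an arbitrary 3-percentage-point threshold. The dictionary keys are made up for the example.

```python
def implied_probabilities(odds_a, odds_b):
    """Convert two-way decimal odds to margin-free probabilities (normalization)."""
    raw_a, raw_b = 1 / odds_a, 1 / odds_b
    overround = raw_a + raw_b  # above 1.0 because of the bookmaker's margin
    return raw_a / overround, raw_b / overround

def flag_edges(games, threshold=0.03):
    """Return (name, edge) for games where the model beats the market by threshold.

    games: list of dicts with hypothetical keys "name", "model_prob",
    "odds_team", and "odds_opponent" (decimal odds for both sides).
    """
    flagged = []
    for game in games:
        market_prob, _ = implied_probabilities(game["odds_team"], game["odds_opponent"])
        edge = game["model_prob"] - market_prob
        if edge >= threshold:
            flagged.append((game["name"], round(edge, 3)))
    return flagged
```

Because the comparison is against margin-free probabilities, a flagged edge still has to clear the bookmaker's margin; checking the expected value at the actual offered price is a sensible second filter.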

This multi-layered strategy helps bettors find "fade opportunities", where public sentiment has skewed the odds away from the true probabilities. It also identifies mismatches that single-model users often miss. Paired with disciplined approaches like fractional Kelly betting, this method offers a path to consistent profits.


Conclusion: Choosing the Right Approach for Better Betting

When it comes to boosting betting ROI, multi-model consensus clearly outshines single-model strategies. Single models often mirror sportsbook odds too closely, leaving little room to capitalize on pricing inefficiencies. In contrast, multi-model systems cut through the noise and reduce bias, delivering a more refined and actionable edge. Research backs this up, showing that focusing on calibration - rather than just accuracy - leads to noticeably higher ROI.

Ville Satopää and his research team highlight that while simple averaging reduces noise, more advanced methods like prediction markets and Bayesian aggregators go further. They improve signal extraction and tackle both noise and bias simultaneously.
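As an illustration of going beyond a simple average, one aggregator discussed in the forecast-combination literature averages probabilities in log-odds space and then optionally extremizes the result. The sketch below is not presented as the exact method the researchers evaluated; the function name is illustrative, and the extremizing factor is an assumption that would normally be estimated from past forecasts.

```python
import math

def logit_aggregate(probs, extremize=1.0):
    """Average forecasts in log-odds space, optionally pushing the result away from 0.5.

    probs: individual win probabilities, each strictly between 0 and 1.
    extremize: values above 1 sharpen the consensus; 1.0 keeps the plain log-odds mean.
    """
    mean_logit = sum(math.log(p / (1 - p)) for p in probs) / len(probs)
    return 1 / (1 + math.exp(-extremize * mean_logit))
```

Extremizing counteracts the way averaging pulls a consensus toward 0.5 when forecasters hold partly independent information. For forecasts of 0.6 and 0.7, the plain log-odds mean gives about 0.65, and a factor of 2 pushes the consensus to about 0.78.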

WagerProof takes full advantage of this multi-model strategy with its Edge Finder, which integrates statistical models, prediction markets, and public betting data to pinpoint outliers and value bets. Meanwhile, WagerBot Chat taps into live professional data for real-time insights, and Real Human Editors ensure high-value picks are validated and explained. This layered, transparent approach helps bettors uncover inefficiencies that single-model systems often miss. It's the backbone of WagerProof's comprehensive suite of tools.

For bettors who want to harness these insights, WagerProof offers plans tailored to different needs. The Free Plan provides a demo of the Edge Finder, while the Premium Plan unlocks full access to tools like the AI Game Simulator, WagerBot Chat, historical analytics, and a private Discord community. For professional groups, the Enterprise Plan offers custom integrations and advanced analytics.

Whether you're a casual bettor or a seasoned pro, prioritizing calibration over raw accuracy and embracing multi-model consensus can give you a real edge. WagerProof delivers professional-grade analysis in a user-friendly format, combining transparent data with actionable insights to help you make smarter bets.

FAQs

Why do multi-model systems often deliver better returns than single models?

Multi-model systems blend predictions from several independent models into a single, more reliable forecast. Combining different perspectives helps offset the flaws or biases present in any one model. The idea is supported by results like the Condorcet Jury Theorem, which shows that when judges are independent and each is right more often than not, a majority vote becomes more likely to be correct as more judges are added.
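For independent models that are each correct with the same probability p, the chance that a majority vote is correct follows from the binomial distribution. This sketch assumes perfect independence, which real betting models rarely have, so its numbers are optimistic; the function name is illustrative.

```python
from math import comb

def majority_correct_probability(n_models, p_correct):
    """P(a majority of n independent models is right), each right with prob p_correct."""
    if n_models % 2 == 0:
        raise ValueError("use an odd number of models so a strict majority exists")
    need = n_models // 2 + 1
    return sum(comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
               for k in range(need, n_models + 1))
```

With p = 0.6, three independent models give a majority accuracy of 0.648 and five give about 0.683. With p = 0.45, the majority does worse than a single model, which is why the theorem requires each model to beat chance.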

Research backs this up too. Multi-model ensembles have been found to cut forecasting errors by about 12%, and in some cases, they even outperform the top-performing individual model. For bettors, this translates to steadier, more profitable decisions over time.

How does calibration improve betting accuracy?

Calibration - how well a model's predicted probabilities match actual outcomes - is a key factor in making profitable betting decisions. Even if a model is highly accurate, it can mislead bettors if it consistently over- or underestimates win probabilities. This mismatch can lead to missed opportunities or paying more than necessary when odds don’t reflect reality.

When predictions are properly calibrated, bettors can spot mispriced lines and make more informed choices. For example, strategies like Kelly staking become much more effective when backed by well-calibrated probabilities. Research shows that focusing on calibration, rather than just accuracy, can dramatically improve returns - sometimes turning losses into profits over the course of a season. Tools like WagerProof provide real-time data and resources to help ensure your probability estimates align with actual results, boosting both precision and profitability.

Why is it important to prevent overfitting in single-model predictions?

Preventing overfitting is crucial because a model that's overly tuned to historical data might excel at analyzing past games but falter when predicting future outcomes. Essentially, it becomes too fixated on specific patterns from the past, making it less dependable when faced with new, unpredictable scenarios.

For bettors, this can mean making poor choices and overlooking profitable opportunities. A model that generalizes well to fresh data is more likely to deliver accurate and consistent predictions - qualities that are essential for smarter betting decisions.


Ready to bet smarter?

WagerProof uses real data and advanced analytics to help you make informed betting decisions. Get access to professional-grade predictions for NFL, College Football, and more.
